I’ve been researching Continuous Integration (CI) and unit testing in iOS lately and learning a lot about testing applications. So far, I’ve learned how to write unit tests in Xcode, picked up some basic guidelines for writing those tests, and experimented with the Jenkins CI server to create automated builds, tests, email notifications, and archives. This post is meant to be a collection of my thoughts and takeaways.

Two Types of Unit Tests

There are two main types of unit tests in Objective-C: logic tests and application tests. There are also two general approaches to unit testing, which happen to correlate with them: bottom-up and top-down. In bottom-up testing, you test each method or class individually, independently of the others. In top-down testing, you test the functionality of the app as a whole.

Because your logic tests are independent of each other, they can run without needing the context of your application’s controllers or views. Logic tests can only be run on the simulator. Application tests are (appropriately) run in the context of a host app, and can be run either on a device or in the simulator.

Efficiency in Unit Testing

After figuring out how to create unit tests, running them, and seeing the little green checkboxes telling me they passed, my first reaction was to get a little unit-test-happy. I was thinking, oh, I can test this, and that, and all of that… Well, I’m finding that there’s a balance between efficient, high-quality unit tests and simply testing every single input and output.

When you test parsing a JSON response from your server, for example, ONE way to do it is to assert that the final property value is equal to the original value in the JSON. There are, however, many more ways to test your parsing and relational mapping. You might try testing for valid data using character sets, checking that string lengths are greater than zero, or checking that birthdates are before today’s date. Then, try 4 or 5 different data sets, rather than a single one.
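Here is a rough sketch of what those broader checks might look like. It is written in Swift with plain Foundation so it runs on its own, rather than as the Objective-C test case you would actually put in Xcode. The `User` model, its `name` and `birthdate` fields, the allowed character set, and the sample payloads are all made up for illustration.

```swift
import Foundation

// Hypothetical model; the field names are assumptions for illustration.
struct User: Decodable {
    let name: String
    let birthdate: String   // expected as "yyyy-MM-dd"
}

// Decodes one JSON payload, returning nil if it cannot be parsed.
func parseUser(_ json: String) -> User? {
    return try? JSONDecoder().decode(User.self, from: Data(json.utf8))
}

// Returns a list of problems; an empty list means the record looks sane.
func validationProblems(for user: User, today: Date = Date()) -> [String] {
    var problems: [String] = []

    // String length must be greater than zero.
    if user.name.isEmpty {
        problems.append("name is empty")
    }

    // Character set check: letters, spaces, hyphens, and apostrophes only
    // (an assumed rule, adjust to whatever your data really allows).
    var allowed = CharacterSet.letters
    allowed.insert(charactersIn: " -'")
    if user.name.unicodeScalars.contains(where: { !allowed.contains($0) }) {
        problems.append("name contains unsupported characters")
    }

    // Birthdate must parse and must fall before today.
    let formatter = DateFormatter()
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = TimeZone(secondsFromGMT: 0)
    formatter.dateFormat = "yyyy-MM-dd"
    if let date = formatter.date(from: user.birthdate) {
        if date >= today {
            problems.append("birthdate is not in the past")
        }
    } else {
        problems.append("birthdate is not a valid date")
    }

    return problems
}

// Several data sets instead of a single happy-path payload.
let samples = [
    "{\"name\":\"Ada Lovelace\",\"birthdate\":\"1815-12-10\"}",
    "{\"name\":\"\",\"birthdate\":\"1990-01-01\"}",
    "{\"name\":\"R2-D2 <script>\",\"birthdate\":\"1990-01-01\"}",
    "{\"name\":\"Grace Hopper\",\"birthdate\":\"2999-01-01\"}",
    "{\"name\":\"Alan Turing\",\"birthdate\":\"not a date\"}"
]

for json in samples {
    if let user = parseUser(json) {
        print(user.name, validationProblems(for: user))
    } else {
        print("could not parse:", json)
    }
}
```

In a real test case, each of these checks would probably become its own assertion (something like `XCTAssertTrue(validationProblems(for: user).isEmpty)`), run once per sample payload.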

Basically, rather than test for a specific outcome with your logic tests, be a little more broad. Trying to test for a very specific output might be beneficial in some cases, but it can quickly become tedious and time-consuming. Testing for types of data, unsupported input characters, and invalid states can cover more errant cases in less time. Significantly less time.

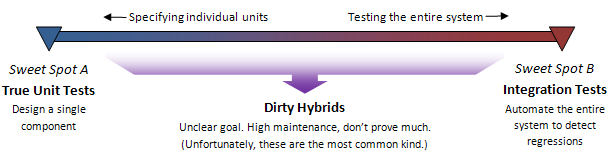

The Sweet Spot

I found this graphic in a blog post on unit testing best practices about halfway through my research, and I was really glad I did. Essentially, unit tests should hit one of two sweet spots, either testing individual units (bottom-up) or testing the entire system (top-down), and not fall into a dirty hybrid that only costs additional time and effort without proving much.